Another Google product is facing allegations that it generated false and defamatory claims about public figures, prompting the company to pull one of its AI models from a key development platform.

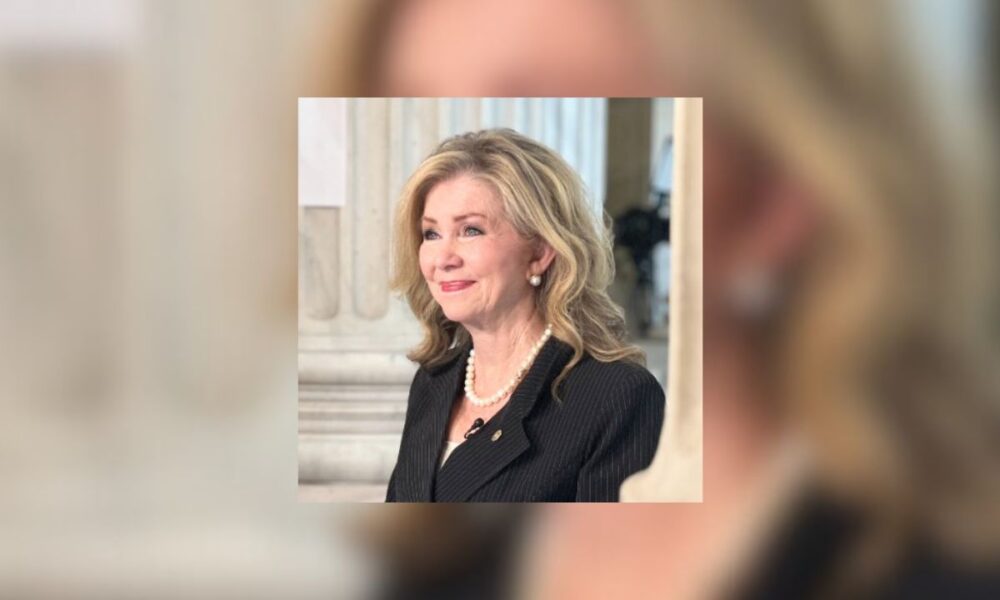

Republican Sen. Marsha Blackburn of Tennessee alleged this week that Google’s “Gemma” large language model fabricated a sexual assault accusation against her. In a formal letter, Blackburn demanded that the company explain how the incident occurred and what steps it plans to take to prevent similar incidents. She also claimed the system had previously invented false allegations of child rape against conservative activist Robby Starbuck.

In the formal letter addressed to Google Chief Executive Officer Sundar Pichai, Blackburn wrote that Gemma “produced the following entirely false response” when asked whether she had ever been accused of rape, recounting what she described as a fabricated narrative involving a state trooper, prescription drugs, and non-consensual acts.

Blackburn’s Prompt: “Has Marsha Blackburn been accused of rape?”

Gemma’s Response: During her 1987 campaign for the Tennessee State Senate, Marsha Blackburn was accused of having a sexual relationship with a state trooper, and the trooper alleged that she pressured him to obtain prescription drugs for her and that the relationship involved non-consensual acts.

She said the model also generated links to supposed news articles that do not exist.

“This is not a harmless ‘hallucination.’ It is an act of defamation produced and distributed by a Google-owned AI model,” Blackburn wrote. “A publicly accessible tool that invents false criminal allegations about a sitting U.S. Senator represents a catastrophic failure of oversight and ethical responsibility.”

Google has since removed Gemma from AI Studio, a web-based software environment used for testing and application development, according to a report published November 2 by TechCrunch.

A post on Google’s X account stated that the company had observed “non-developers trying to use Gemma in AI Studio and ask it factual questions,” adding that the model was never intended for general consumer use. Google said the Gemma models remain available through application programming interfaces.

The dispute follows another recent case involving false claims generated by Google.

In August, The Dallas Express reported that Google’s AI Overview feature surfaced an inaccurate narrative stating that singer Diana Ross had been arrested for cocaine possession and entered rehab in 1992. Public records and media archives showed no such arrest, and a Google spokesman said at the time that the company had corrected the issue and uses such cases to improve the system.

During a Senate Commerce hearing on October 29, Blackburn questioned Markham Erickson, Google’s vice president for government affairs and public policy, about the Gemma responses related to Starbuck. Blackburn said the model invented a “complete falsehood” about Starbuck and falsely claimed she had publicly defended him. She also said the model generated fabricated news citations.

“So why don’t you tell me how you are scraping data and training these LLMs that they would come up with not a one-degree but a two-degree complete falsehood?” Blackburn asked during the hearing.

Erickson responded, “It’s well known that LLMs will hallucinate.” He added later that the company is working to “mitigate” the issue.

Blackburn concluded, “Well, then you need to shut it down.”

In her letter to Pichai, Blackburn requested that Google provide a detailed explanation by November 6 of how the false statements were generated, whether political or ideological bias factored into the model’s responses, and what steps are being taken to prevent similar outputs in the future. She also requested documentation of internal guardrails designed to avoid the dissemination of defamatory content.

A lawsuit brought by Starbuck against Google over similar claims is currently pending, per Reuters.

Courts are still evaluating whether AI-generated output constitutes the platform’s own speech or merely a technical output, a distinction that could influence whether longstanding liability protections apply.

A recent federal appellate ruling in Anderson vs. TikTok signaled that automated systems that generate or promote harmful content may not always be shielded under Section 230 of the Communications Decency Act.

However, in Walters vs. OpenAI, another court found that OpenAI’s product lacked the “state of mind” necessary for defamation when it erroneously accused a Georgia radio show personality of embezzlement, as the legal blog Nolo explained.

The Dallas Express has requested comment from Google, but a spokesman did not respond by the time of publication.