Meta CEO Mark Zuckerberg has announced significant changes to the company’s content moderation policies across platforms like Facebook, Instagram and Threads.

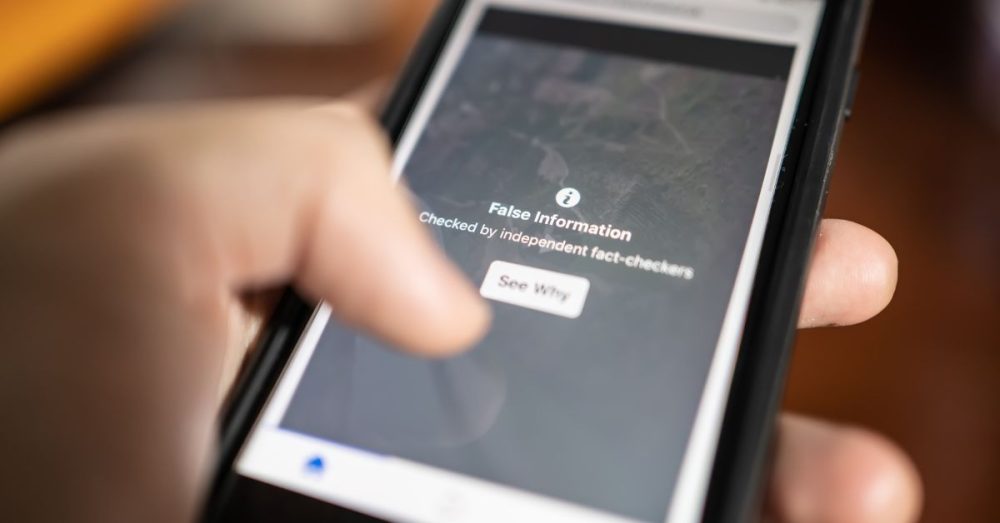

The company will discontinue its external fact-checking program, replacing it with a crowd-sourced system similar to the “Community Notes” feature on Elon Musk’s X (formerly Twitter). This shift aims to reduce perceived censorship and promote free expression, according to the Guardian.

Zuckerberg stated that fact-checkers have been “too politically biased” and have “destroyed more trust than they’ve created.”

Meta’s dramatic about-face over policing political speech comes less than two weeks before President-elect Donald Trump, an outspoken critic of social media censorship, takes office for a second time.

Zuckerberg delivered the news of the changes via video distributed by Meta.

> Here is the full video from Mark Zuckerberg announcing the end of censorship and misinformation policies.
>
> I highly recommend you watch all of it as tonally it is one of the biggest indications of “elections have consequences” I have ever seen pic.twitter.com/aYpkxrTqWe
>
> — Saagar Enjeti (@esaagar) January 7, 2025

Zuckerberg emphasized the company’s commitment to free expression, describing the changes as a return to the principles he laid out in earlier speeches.

In addition to ending the fact-checking program, Meta plans to recommend more political content to users and to simplify its content policies by lifting restrictions on controversial topics. Zuckerberg also announced in the video that the company will relocate its content moderation operations from California to Texas, a move he presented as a way to address concerns about political bias.

These policy changes have garnered mixed reactions. House Judiciary Committee Chair Jim Jordan praised Zuckerberg for dismantling the company’s “censorship-prone” fact-checking operation, urging other tech giants like Google to adopt similar measures, the New York Post reported.

Conversely, critics warn that scaling back content moderation could lead to an increase in harmful content, with vulnerable groups particularly at risk. The UK’s communications regulator, Ofcom, stressed that Meta must still comply with the Online Safety Act, particularly its provisions on harmful content, the Times of London noted.

These developments come amid broader discussions about the role of social media platforms in moderating content and balancing free expression with the prevention of misinformation and harm.